
Testing Smarter, Not Harder: A guide to prioritising CRO experiments

Conversion Rate Optimisation (CRO) isn’t about running as many tests as you possibly can—it’s about running the right tests for your website. Prioritisation is what separates brands that see real revenue growth from those that waste time on low-impact changes. Without a clear strategy, your CRO efforts can become scattered, leading to inconclusive results and little-to-no uplift.

A common mistake I see eCommerce retailers make all too often is testing for the sake of it—changing button colours, tweaking copy, or experimenting with random design updates without understanding what influences user behaviour. This approach not only wastes resources but also slows down your revenue growth.

Effective CRO means identifying high-impact opportunities, structuring tests to provide clear, actionable insights, and making data-driven decisions that directly improve conversions.

So let’s look at how to prioritise your CRO experiments for maximum impact, covering:

- Where to focus first

- How to avoid common pitfalls

- Real-world examples of optimisations that have delivered measurable results

Prioritisation of High-Impact Experiments

Not all CRO experiments deliver the same value. Some tests will generate clear, measurable improvements, while others waste time on marginal gains. Prioritisation is key: focusing on the right tests ensures effort is spent where it matters most.

By identifying the biggest opportunities, running tests that are quick to implement, and letting data guide decisions (instead of gut instinct), you can drive meaningful conversion improvements without getting bogged down in endless experimentation.

Focus on High-Traffic, Low-Conversion Areas

Some pages attract plenty of traffic but fail to convert visitors into customers. These areas should be your priority for CRO experiments because they offer the biggest opportunity for improvement.

How to Identify High-Impact Areas

Start by analysing your website data. At Proof3, we typically begin with Google Analytics to pinpoint pages where:

- Traffic is high, but conversion rates are below average

- Users drop off before completing key actions (e.g., checkout, form submissions)

- Bounce rates are significantly higher than site-wide averages

Focus on these areas first. Optimising a page that gets thousands of visitors a day will almost always have a bigger impact than tweaking a page that only a handful of users see.
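As a rough illustration of this step, the sketch below ranks pages from an analytics export. It assumes you have already exported sessions and conversions per page (for example from Google Analytics); the `PageStats` class, the `prioritise_pages` function, the 5,000-session threshold and all of the figures are hypothetical. Pages are ranked by their conversion gap: how many more conversions they would have produced at the site-wide average rate.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class PageStats:
    """Hypothetical per-page figures exported from an analytics tool."""
    path: str
    sessions: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.sessions if self.sessions else 0.0


def prioritise_pages(pages: List[PageStats], min_sessions: int) -> List[Tuple[str, float]]:
    """Return (path, conversion gap) for high-traffic pages that convert below
    the site-wide average, largest gap first."""
    site_rate = sum(p.conversions for p in pages) / sum(p.sessions for p in pages)
    candidates = []
    for page in pages:
        if page.sessions >= min_sessions and page.conversion_rate < site_rate:
            # Extra conversions this page would make at the site-wide rate.
            gap = page.sessions * site_rate - page.conversions
            candidates.append((page.path, gap))
    return sorted(candidates, key=lambda item: item[1], reverse=True)


# Illustrative numbers only.
pages = [
    PageStats("/product/a", sessions=50_000, conversions=600),
    PageStats("/category/b", sessions=30_000, conversions=540),
    PageStats("/checkout", sessions=8_000, conversions=1_200),
    PageStats("/faq", sessions=500, conversions=2),
]

ranked = prioritise_pages(pages, min_sessions=5_000)
for path, gap in ranked:
    print(f"{path}: about {gap:.0f} conversions below the site average")
```

In this made-up data the FAQ page is skipped despite its poor conversion rate because too few people see it, which is exactly the trade-off described above.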

Why It Works

CRO is about maximising return on effort. Instead of spreading resources across your entire site, prioritise the areas where small changes can lead to significant revenue gains.

For example, let’s say your product pages receive 60% of your traffic but have a 1.5% conversion rate. If a well-tested experiment improves that rate to 2%, that is a 33% relative increase in conversions from those pages, and the revenue lift could be substantial. Compare that to optimising a rarely visited FAQ page: the revenue impact wouldn’t even register.
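To make that concrete, here is the arithmetic as a short Python sketch. The monthly traffic (100,000 sessions), the average order value (£80) and the FAQ page’s 1% share of traffic are hypothetical, and the helper simplifies by treating every conversion as one order.

```python
def extra_monthly_revenue(
    monthly_sessions: int,
    traffic_share: float,
    baseline_rate: float,
    new_rate: float,
    average_order_value: float,
) -> float:
    """Additional revenue per month if a page group's conversion rate moves
    from baseline_rate to new_rate (simplification: one conversion = one order)."""
    page_sessions = monthly_sessions * traffic_share
    extra_orders = page_sessions * (new_rate - baseline_rate)
    return extra_orders * average_order_value


# Hypothetical inputs: 100,000 sessions a month, £80 average order value.
relative_lift = (0.02 - 0.015) / 0.015
product_gain = extra_monthly_revenue(100_000, 0.60, 0.015, 0.02, 80.0)  # product pages
faq_gain = extra_monthly_revenue(100_000, 0.01, 0.015, 0.02, 80.0)      # rarely visited FAQ

print(f"Relative lift: {relative_lift:.0%}")
print(f"Product pages: £{product_gain:,.0f} extra per month")
print(f"FAQ page: £{faq_gain:,.0f} extra per month")
```

With these inputs, 60,000 product-page sessions produce 900 orders at 1.5% and 1,200 at 2%, so the extra 300 orders are worth about £24,000 a month, while the same improvement on the FAQ page is worth about £400.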

Key Takeaways

✅ Start with high-traffic, low-conversion pages to maximise impact

✅ Use data to prioritise, not guesswork

✅ Use behaviour-tracking tools to understand where users get stuck

Prioritise Quick Wins with Clear Outcomes

CRO isn’t just about big, complex experiments—it’s about making smart, data-backed changes that drive results quickly. While some high-impact tests require significant development work, others can be implemented fast and still deliver measurable improvements. The key is to focus on experiments that are both easy to execute and have a clear, quantifiable outcome.

Why Quick Wins Matter

Testing is only valuable if it leads to actionable insights. A test that takes months to develop and deploy might provide interesting data, but if it doesn’t move the needle on revenue or conversions, it’s a wasted effort. Prioritising quick wins allows you to continuously optimise without getting stuck in long development cycles.

For example, if data shows that users frequently drop off at the checkout stage, a quick test could involve adding trust signals (like payment security badges) or simplifying form fields. These are low-effort changes that can lead to immediate improvements in conversion rates.
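A simple way to see where that drop-off happens is to compare how many users reach each step of the funnel. The sketch below assumes you already have user counts per step; the `step_drop_off` helper, the step names and the figures are hypothetical.

```python
from typing import List, Tuple


def step_drop_off(funnel: List[Tuple[str, int]]) -> List[Tuple[str, float]]:
    """Share of users lost between each pair of consecutive funnel steps."""
    results = []
    for (from_step, from_users), (to_step, to_users) in zip(funnel, funnel[1:]):
        results.append((f"{from_step} -> {to_step}", 1 - to_users / from_users))
    return results


# Hypothetical counts of users reaching each step.
funnel = [("basket", 10_000), ("checkout", 6_000), ("payment", 3_000), ("purchase", 2_400)]

drop_offs = step_drop_off(funnel)
for transition, rate in drop_offs:
    print(f"{transition}: {rate:.0%} drop-off")

worst_transition, worst_rate = max(drop_offs, key=lambda item: item[1])
print(f"Biggest leak: {worst_transition}")
```

In this made-up funnel the checkout-to-payment step loses half of its users, so that is where quick tests such as trust signals or shorter forms would be aimed first.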

How to Identify Quick Wins

To ensure you’re focusing on the right tests, ask:

- Is it easy to implement? If a test requires major backend changes, it’s not a quick win.

- Can we measure the outcome? If the impact isn’t quantifiable, the test won’t provide useful insights.

- Does data support this test? CRO should be led by analytics, not assumptions.
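The three questions above can be turned into a simple screening step for a backlog of test ideas. This is a minimal sketch: the `TestIdea` fields, the `is_quick_win` helper and the example ideas are hypothetical, and a real backlog would usually also estimate each idea’s potential impact.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class TestIdea:
    name: str
    needs_backend_change: bool      # Is it easy to implement?
    primary_metric: Optional[str]   # Can we measure the outcome?
    supporting_evidence: List[str] = field(default_factory=list)  # Does data support it?


def is_quick_win(idea: TestIdea) -> bool:
    """An idea passes only if all three questions are answered positively."""
    return (
        not idea.needs_backend_change
        and idea.primary_metric is not None
        and len(idea.supporting_evidence) > 0
    )


backlog = [
    TestIdea("Add payment security badges at checkout", False,
             "checkout completion rate", ["high drop-off at payment step"]),
    TestIdea("Rebuild the recommendation engine", True,
             "average order value", ["low cross-sell rate"]),
    TestIdea("Change the button colour", False, "click-through rate"),
]

quick_wins = [idea.name for idea in backlog if is_quick_win(idea)]
print(quick_wins)
```

The button-colour idea fails not because it is hard to build but because nothing in the data suggests it matters, which mirrors the “testing for the sake of it” trap described earlier.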

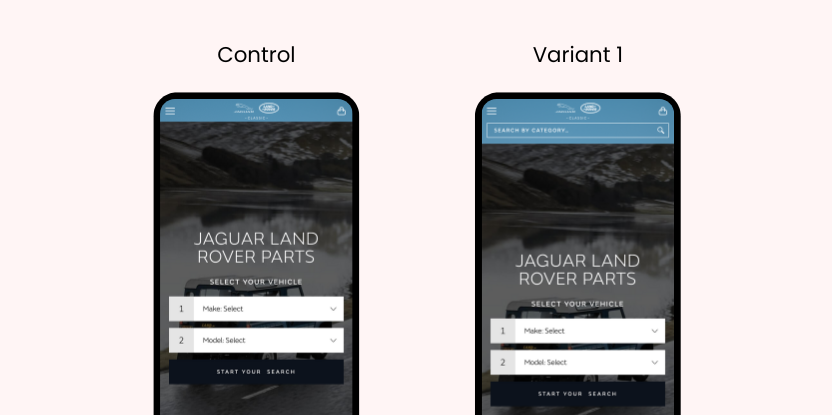

Quick Win Example: Improving Mobile Search Visibility

For a global car brand client, we tested making the internal search bar more visible on mobile devices. We expected this to be a ‘quick win’ because we knew that visitors who used on-site search were typically twice as likely to convert. This small change had a big impact: purchases increased by 25%. We then ran the same test on the desktop site, where it led to a 29% increase in revenue.

This quick, low-effort change delivered clear, measurable results, demonstrating the value of prioritising easy-to-implement experiments that directly improve conversions.

Key Takeaways

✅ Start with easy-to-implement tests that don’t require heavy development.

✅ Ensure every test has a clear, measurable outcome.

✅ Let data drive your decisions—focus on what users need, not what you assume will work.

By prioritising quick wins with clear outcomes, you can keep CRO efforts moving forward without unnecessary complexity.

Let Data Lead, Not Personal Bias

CRO is about improving conversions based on user behaviour, not personal opinions. Yet one of the biggest obstacles to effective testing is bias: allowing assumptions, preferences, or gut feelings to dictate decisions rather than relying on data. When experiments are led by what feels right instead of what users actually do, brands risk wasting time on changes that don’t move the needle.

The Problem with Personal Bias in CRO

Everyone has opinions on what makes a “better” user experience—marketers, designers, developers, and even senior leadership. But just because a team believes something will work doesn’t mean it will. Assumptions can be misleading, and making decisions based on internal preferences rather than user data often results in ineffective tests.

For example, a brand may assume that adding a video to a product page will increase conversions because “video is engaging.” But if heatmaps show that users don’t interact with media, or performance data shows that the video slows the page down, this change could actually hurt sales rather than help them.

How to Remove Bias from CRO Decisions

To ensure testing decisions are objective and data-driven, follow these steps:

- Start with real user insights – Use tools such as Google Analytics and Microsoft Clarity (heatmaps and session recordings) to identify friction points.

- Validate assumptions before testing – If an idea is based on preference rather than user behaviour, challenge it.

- Trust the numbers, not opinions – If a test result contradicts expectations, accept the data rather than trying to justify an alternative explanation.

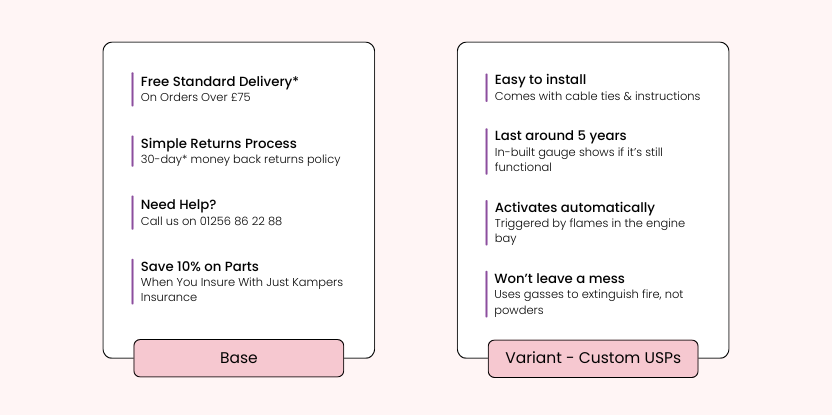

Example: Challenging internal assumptions to avoid wasted time & effort

One of our clients, a specialist automotive parts supplier, wanted to write unique selling points (USPs) for every single product page, instead of using their standard USPs, to increase product page-to-basket conversions. This was a mammoth task that would have required hundreds of hours of manual review. While not an unreasonable hypothesis, it needed to be tested first.

After running the test (in this case for two weeks, to collect enough data for a reliable result), we found that adding product-specific USPs had no statistically significant impact on performance, ultimately saving the team a huge amount of time and effort. This experience highlighted how important it is to test assumptions rather than blindly follow them.
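For readers wondering what “no significant impact” means in practice, one common check for a conversion-rate A/B test is a two-proportion z-test. The sketch below uses made-up session and basket-add counts, not the client’s data, and the `two_proportion_z_test` helper is hypothetical; your testing tool may use a different method (for example a Bayesian model).

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple:
    """Two-sided z-test comparing the conversion rates of control (a) and
    variant (b). Returns (z statistic, p-value)."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    standard_error = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / standard_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical: 20,000 product-page sessions per arm, basket adds as conversions.
z, p_value = two_proportion_z_test(600, 20_000, 615, 20_000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
if p_value < 0.05:
    print("Statistically significant at the 5% level")
else:
    print("No statistically significant difference")
```

Here a 3.0% versus 3.075% basket rate is well within normal variation, which is the kind of result that justifies not rolling out a costly change.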

Key Takeaways

✅ User behaviour should drive decisions, not opinions.

✅ Gather insights before running tests—don’t test based on assumptions.

✅ Be willing to accept results, even if they go against expectations.

By letting data lead, CRO efforts become more strategic, efficient, and—most importantly—effective.

“Best practices around design and UX are not truths set in stone. What works for one set of customers doesn’t always work for another. But they can be used as a good hypothesis to test.” — Joe Turner, Co-Founder of Proof3

Common CRO Pitfalls and How to Avoid Them

Even with a strong prioritisation framework, CRO can still go wrong. Many brands run tests that don’t deliver clear insights, waste time on ineffective experiments, or misinterpret results due to bias. Understanding these common mistakes—and knowing how to avoid them—will help ensure every test adds value.

Testing Everything at Once

One of the biggest mistakes in CRO is trying to test too many things at the same time. It’s tempting to believe that running multiple experiments across different pages or elements will accelerate results. In reality, this approach leads to vague insights and inconclusive data.

When too many tests overlap, it becomes difficult to pinpoint what’s driving changes in performance. If a business runs five different tests on its checkout page at once—changing the button colour, simplifying the form, adding trust signals, tweaking shipping options, and adjusting the CTA—it may see an uplift in conversions.

But which change made the difference? Without knowing, it’s impossible to replicate success elsewhere.

The Fix:

Instead of spreading your efforts thin, create a structured testing roadmap. Prioritise high-impact areas and run controlled experiments one at a time. This ensures each test provides clear learnings that can be applied across the site.

The Trap of Confirmation Bias

Many CRO tests fail because they are designed to confirm an existing belief rather than uncover the best solution. Confirmation bias happens when brands make changes they expect to work and then interpret data in a way that supports that expectation—rather than objectively analysing the results.

For example, a team might believe that reducing the number of checkout steps will increase conversions. If they run a test and see a slight uplift, they may declare the change a success without considering other factors—such as whether the uplift was within normal traffic fluctuations or whether a more detailed checkout process might work better for their audience.

The Fix:

To reduce confirmation bias, challenge assumptions before testing. Set up experiments that explore multiple variations, not just the one you think will win. Wait until results reach statistical significance before rolling out changes, and be open to results that contradict expectations.
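Deciding how much traffic a test needs before it starts also helps avoid calling a winner too early. The sketch below uses the standard normal-approximation formula for comparing two conversion rates; the 1.5% to 2% example, the 5% significance level, the 80% power and the 2,000 daily sessions are illustrative choices, and the `sample_size_per_variant` helper is hypothetical.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variant(baseline: float, target: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate sessions needed in each arm to detect a change from the
    `baseline` to the `target` conversion rate with a two-sided test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline + target) / 2
    numerator = (
        z_alpha * sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * sqrt(baseline * (1 - baseline) + target * (1 - target))
    ) ** 2
    return ceil(numerator / (target - baseline) ** 2)


n = sample_size_per_variant(0.015, 0.02)
print(f"About {n:,} sessions per variant")
# Hypothetical: 2,000 eligible sessions a day, split across two variants.
print(f"Roughly {ceil(2 * n / 2_000)} days")
```

Running a test until it reaches a pre-calculated sample size, rather than stopping as soon as the favoured variant looks ahead, is one practical guard against confirmation bias.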

Not Controlling Variables

For a test to provide reliable insights, it needs to isolate a single variable. If multiple elements change at once, there’s no way to know which one influenced the result. Yet, many businesses make the mistake of running multivariate tests without a clear structure, leading to inconclusive outcomes.

For example, if an A/B test changes both the headline and the call-to-action on a landing page, and conversions increase, was it the new headline that made the difference? Or the CTA? Without a controlled test, there’s no way to know.

The Fix:

Stick to one variable per test wherever possible. If testing multiple elements is necessary, use a multivariate testing approach with structured variations to ensure clean data. The goal isn’t just to see improvement—it’s to understand why an improvement happened, so it can be replicated elsewhere.
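One structured way to run a multivariate test is a full-factorial design, in which every combination of the elements being tested becomes its own variation. The sketch below builds those combinations for the headline and CTA example above; the `full_factorial` helper, the copy values and the weekly traffic figure are hypothetical.

```python
from itertools import product
from typing import Dict, List


def full_factorial(factors: Dict[str, List[str]]) -> List[Dict[str, str]]:
    """Every combination of factor levels, one dict per variation."""
    names = list(factors)
    return [dict(zip(names, levels)) for levels in product(*(factors[name] for name in names))]


factors = {
    "headline": ["current headline", "benefit-led headline"],
    "cta": ["Buy now", "Add to basket"],
}

variations = full_factorial(factors)
weekly_sessions = 40_000  # hypothetical
for variation in variations:
    print(variation)
print(f"{weekly_sessions // len(variations):,} sessions per variation per week")
```

Because each combination is measured separately, the effect of the headline and the CTA can be compared independently, along with whether they interact. The cost is traffic: every extra element or option multiplies the number of variations, so each one receives a smaller share of visitors.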

By avoiding these common pitfalls, CRO tests become more reliable, actionable, and effective in driving real growth.

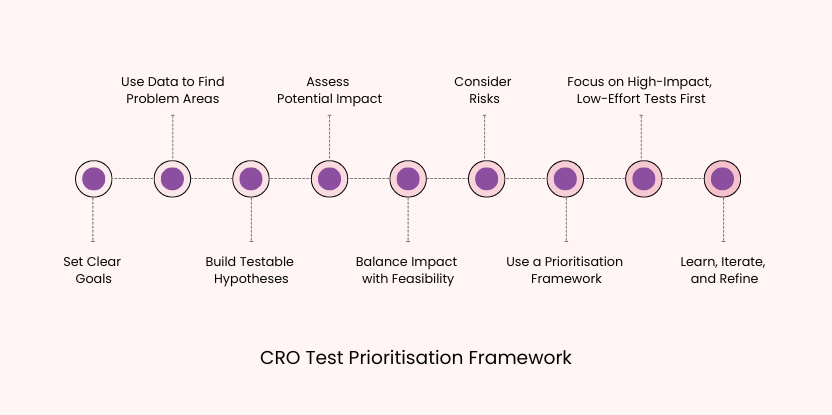

CRO Test Prioritisation Framework

To summarise, here’s a structured approach to prioritising experiments:

By following this framework, you can run smarter, more effective CRO experiments—focusing on the changes that drive growth.

Conclusion

This guide gives you a clear framework for prioritising CRO experiments—focusing on high-impact opportunities, structured testing, and data-driven decisions. At Proof3, we’ve run countless experiments for eCommerce retailers like Tile Giant, Jaguar Land Rover, Just Kampers and many more, delivering measurable revenue growth while learning what truly moves the needle.

Not every test will be a winner, and that’s okay. The key is to learn from every experiment—successes and failures alike. Each test gives you a deeper understanding of your customers and what drives conversions. Over time, these insights stack up, leading to smarter decisions, stronger results, and a real impact on your bottom line.

As a reward for reading to the end…

Get a free GrowthGauge report for an unbiased assessment of your website’s growth potential.

No obligations, and no catches – just clearly laid out achievable improvements with projected growth outcomes in specific timeframes.
